Designing Response Systems for Agentic AI Products.

Topics:

Agentic Experience

AIX Designer

AI/UX Design

Gen AI Experience

Anthony Todd

We’re entering a new age in how users consume content. Beyond designing traditional UX, I also design agentic experiences that live within the ecosystems of other users and businesses. This year, I led the design of an AI tool that simplified our site experience, protected proprietary content from scraping, and was ultimately accepted into Google’s AgentSpace.

My role was to define the experience of the response and how data was presented. I mapped how and when data should be surfaced, and identified which considerations would be most helpful when sourcing from content archives. To benefit our product, I planned ways to identify intent signals from the user’s conversation and encouraged sign-ups with persuasive value exchanges, while staying aligned with the goals of my product and engineering teams.

I'm open to joining a product-driven team that brings AI experiences to real users in interesting ways. I design intuitive, explainable, and trustworthy AI experiences that align with both user needs and product goals. I can contribute experience laying the foundations for UX strategy and human-AI interaction, and help bring it all to life through cross-team collaboration.

Sound good? Let’s connect.

Case Study

Hold That Thought: Rethinking Interruptibility in Agentic AI Workflows

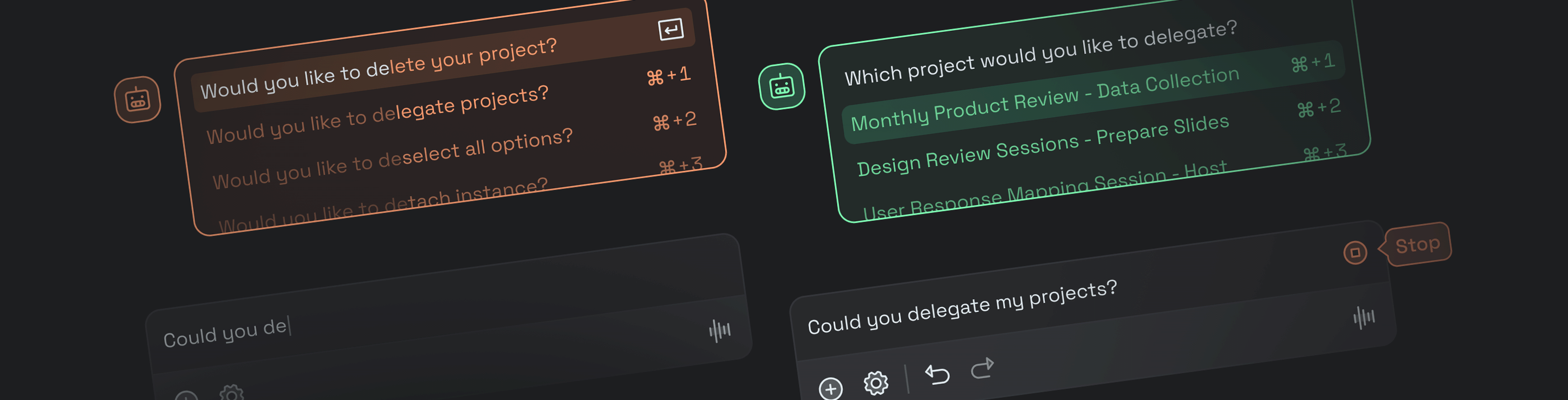

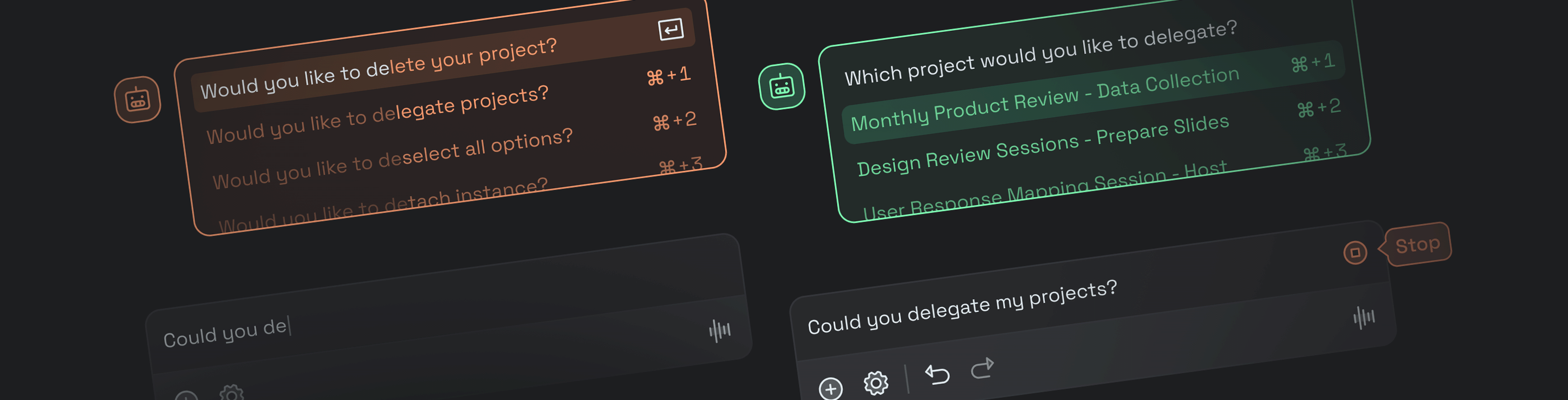

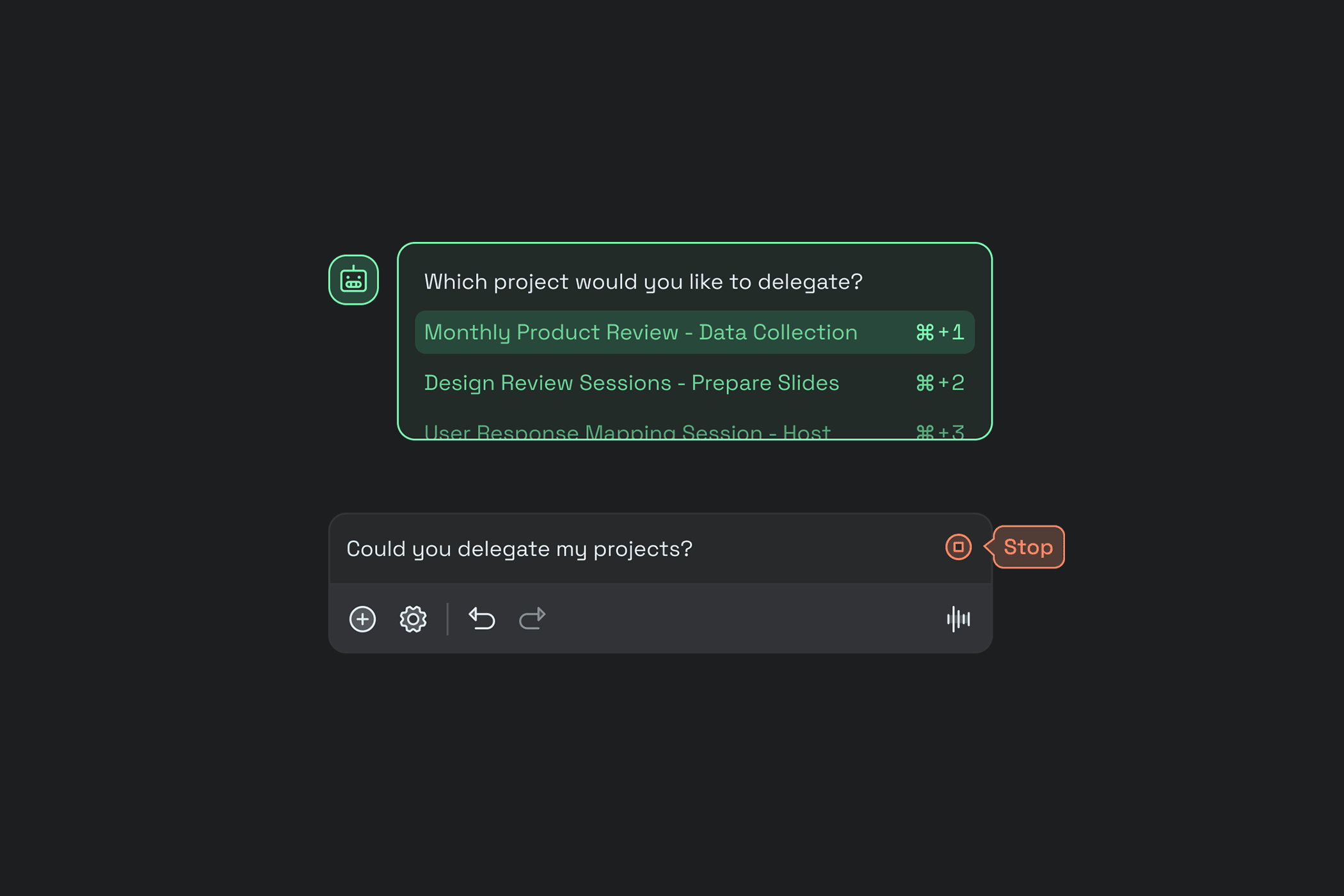

This mock study explores a common tension point in agentic AI design: how and when a user should interrupt or override a system that has already started acting on their behalf. I came up with a few speculative but realistic scenarios that highlight recurring patterns in modern AI design. These examples show how I dissect friction, consider intent ethically, and propose design iterations that rebalance control between the human and the agent.

What’s our challenge?

Qualitative Observations:

In early qualitative interviews, we noticed a recurring edge in user tone. People described the agent as "a little too eager" or "jumping the gun" in places where precision still mattered. What began as polite language often turned emotional, especially when users described a sense of loss. It’s easy to understand the frustration of a file being overwritten, an email sent prematurely, or a message drafted in an odd tone they didn’t like. These weren’t technical failures; they were emotional ones. Think about it: users weren’t frustrated by the agent’s intelligence. They were frustrated by a careless, inconsiderate experience.

Quantitative Observations:

To back this up quantitatively, we gathered conversation data from intent flagging. Across a sample of 3,200 agent-user interactions, 18 percent included phrases like “not yet,” “wait,” “hold on,” or “stop.” Over 60 percent of those were logged after the agent had already begun an action. In one test, users who felt they had no way to pause or undo an action rated their experience 2.1 out of 5 on trust perception. Those who had visible controls scored it closer to 4.2.
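The study doesn’t say how the intent flagging was implemented. As a minimal sketch, assuming simple case-insensitive phrase matching over logged interactions, the two reported ratios could be computed like this. The phrase list comes from the observation above; the `Interaction` record and its `agent_already_acting` flag are hypothetical.

```python
import re
from dataclasses import dataclass

# Hesitation phrases quoted in the observations. A fixed list is an
# illustrative assumption; a production flagger might use a classifier.
HESITATION_PHRASES = ("not yet", "wait", "hold on", "stop")
_PATTERN = re.compile(
    r"\b(?:" + "|".join(re.escape(p) for p in HESITATION_PHRASES) + r")\b",
    re.IGNORECASE,
)


@dataclass
class Interaction:
    """Hypothetical log record for one agent-user interaction."""
    user_text: str
    agent_already_acting: bool  # True if the agent had already begun an action


def is_hesitation(interaction: Interaction) -> bool:
    """Flag an interaction whose text contains a hesitation phrase as whole words."""
    return _PATTERN.search(interaction.user_text) is not None


def hesitation_stats(interactions: list[Interaction]) -> tuple[float, float]:
    """Return (share of interactions flagged,
    share of flagged interactions logged after the agent began acting)."""
    flagged = [i for i in interactions if is_hesitation(i)]
    if not flagged:
        return 0.0, 0.0
    late = sum(i.agent_already_acting for i in flagged)
    return len(flagged) / len(interactions), late / len(flagged)
```

Whole-word matching keeps words like "stopped" or "awaiting" from being counted. Applied to the reported sample, 18 percent of 3,200 is 576 flagged interactions, so "over 60 percent" means at least 346 of them arrived after the agent had already started acting.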

How are users responding?

User One

User Feedback

“I was still thinking when it just... did the thing.”

Why are they saying this?

Hypothesis: The agent is operating on task completion, not task readiness. Users are still in an evaluative mindset, but the AI is interpreting silence as approval. Ethical intent markers in our logs show a significant portion of regret-based prompts happening just after agent execution, suggesting a lack of consent in timing.

User Two

User Feedback

“I didn’t even realise it had started.”

Why are they saying this?

Hypothesis: Visual feedback is either too subtle or absent. Many agent actions, especially those that run in the background, start without strong interface cues. When users can’t visually track what the AI is doing, they lose the opportunity to intervene early.

User Three

User Feedback

“I couldn’t find how to undo it, so I just redid everything manually.”

Why are they saying this?

Hypothesis: There’s no obvious off-ramp. Once something is done, users are either forced to live with it or retrace their steps. Session recordings showed that in 42 percent of post-agent interaction flows, users deleted or rebuilt the output from scratch instead of editing it.

Where can we help users first?

First Priority

What part of the user journey?

Agent Engagement Phase (immediate response window)

Potential Strategy?

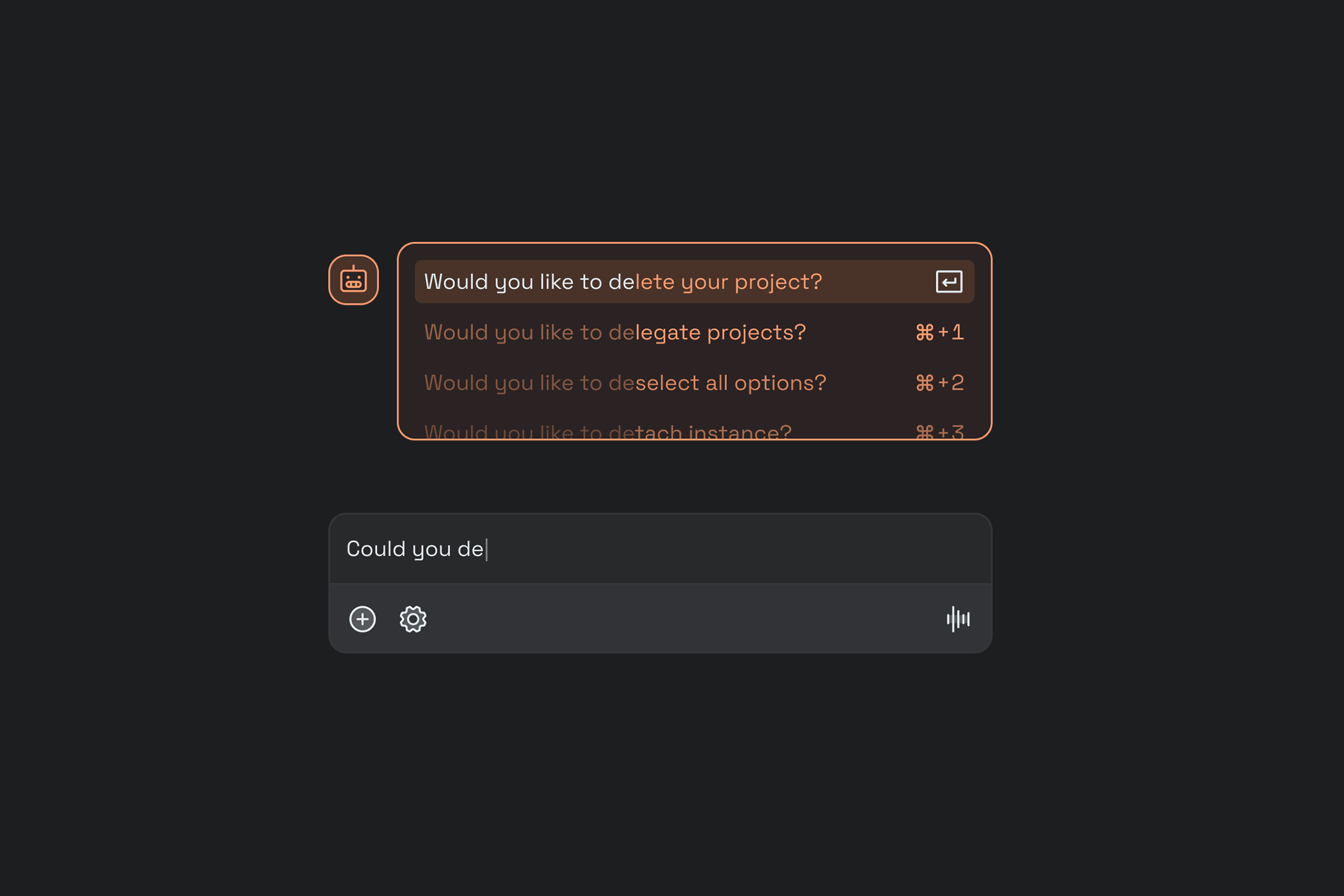

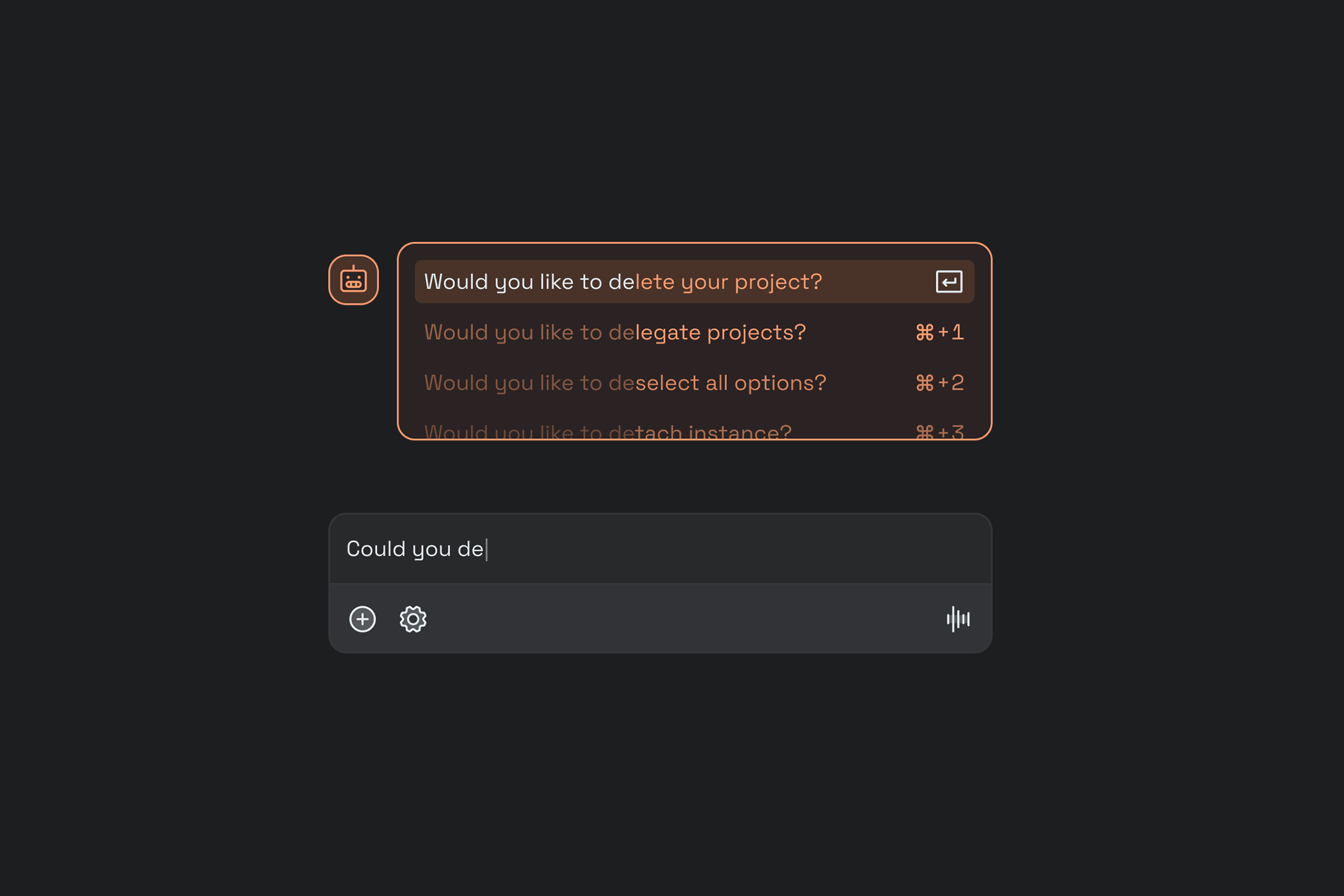

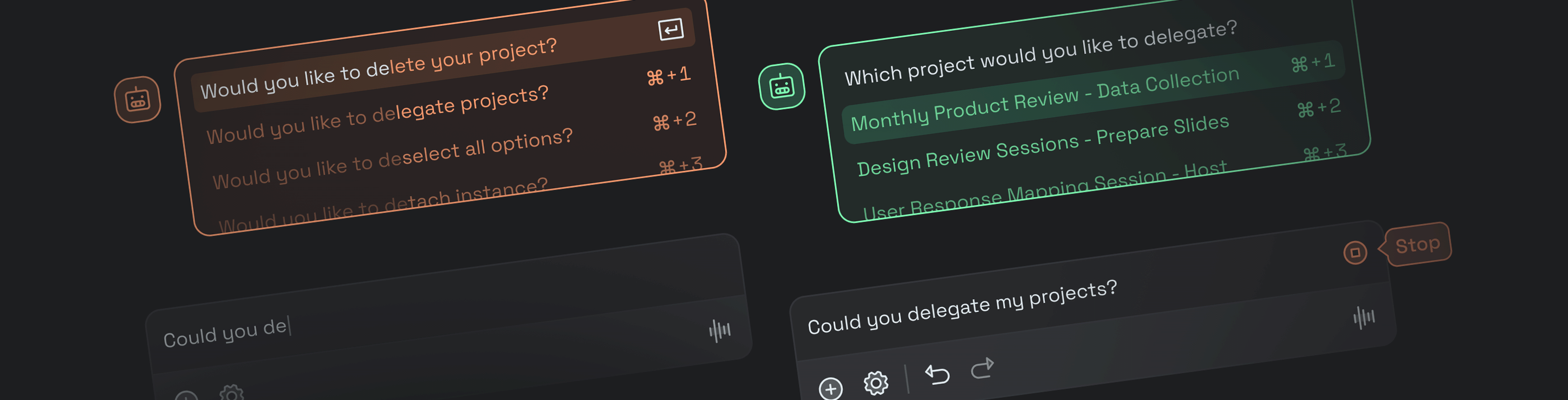

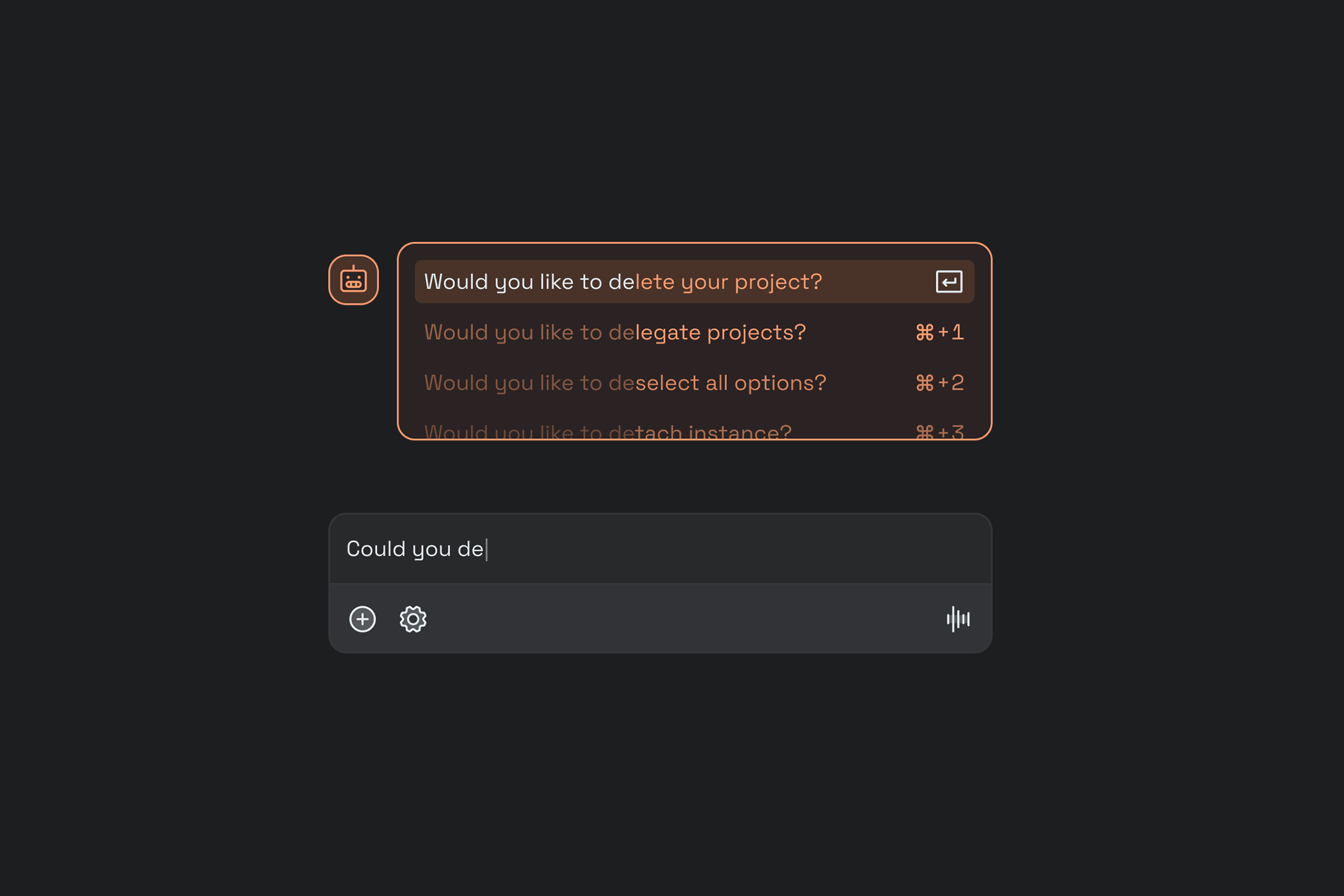

Design for Interruptibility Before Completion:

Allow users to see the agent's intent before it commits to action. This can be done through preview states, timers, or visible confirmations. The goal isn’t to slow the agent down, but to introduce an interrupt layer that respects the user's own rhythm without adding lag.
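One way to picture the "preview plus timer" idea is a grace window: each action is announced with a readable preview, then commits on its own unless the user interrupts first. This is a minimal sketch under assumptions; `InterruptLayer`, `PendingAction`, and the 3-second default are hypothetical, and time is passed in explicitly so the logic stays easy to test.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class PendingAction:
    """An agent action announced to the user before it commits."""
    preview: str                # human-readable intent shown in the UI
    commit: Callable[[], None]  # side effect to run if not interrupted
    deadline: float             # time (seconds) at which it auto-commits
    cancelled: bool = False
    committed: bool = False


@dataclass
class InterruptLayer:
    """Hypothetical interrupt layer: preview first, auto-commit after a grace window."""
    grace_seconds: float = 3.0  # illustrative value, meant to be tuned
    pending: list[PendingAction] = field(default_factory=list)

    def propose(self, preview: str, commit: Callable[[], None], now: float) -> PendingAction:
        action = PendingAction(preview, commit, now + self.grace_seconds)
        self.pending.append(action)
        return action

    def cancel(self, action: PendingAction) -> bool:
        """User interrupt; only possible while the action has not committed."""
        if action.committed:
            return False
        action.cancelled = True
        return True

    def tick(self, now: float) -> None:
        """Commit every non-cancelled action whose grace window has elapsed."""
        still_pending = []
        for action in self.pending:
            if action.cancelled:
                continue  # dropped without running its side effect
            if now >= action.deadline:
                action.commit()
                action.committed = True
            else:
                still_pending.append(action)
        self.pending = still_pending
```

The user never has to click to approve, so the flow keeps moving, while there is still a short window in which a "wait" can land before anything irreversible happens.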

Second Priority

What part of the user journey?

Mid-task Interaction

Potential Strategy?

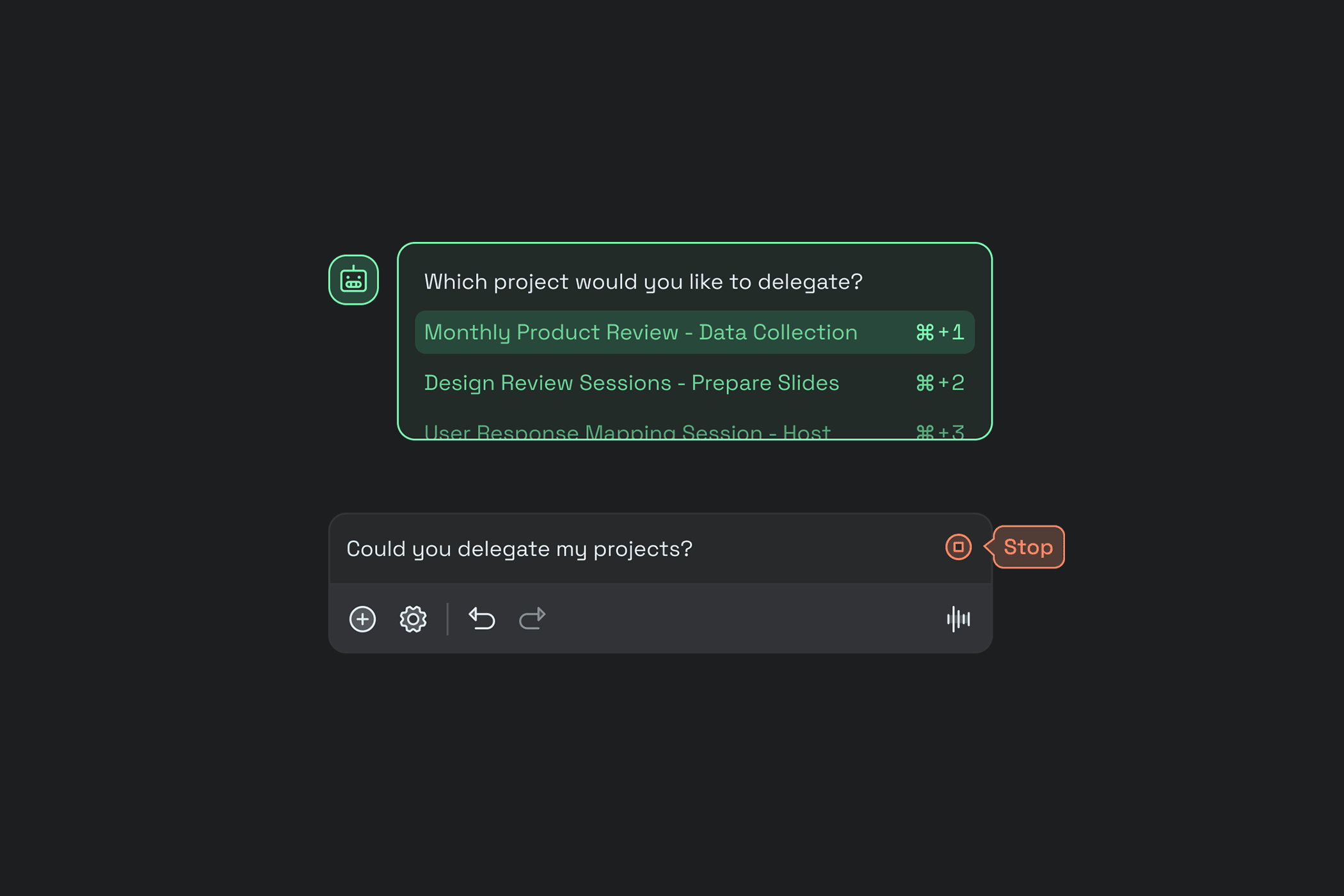

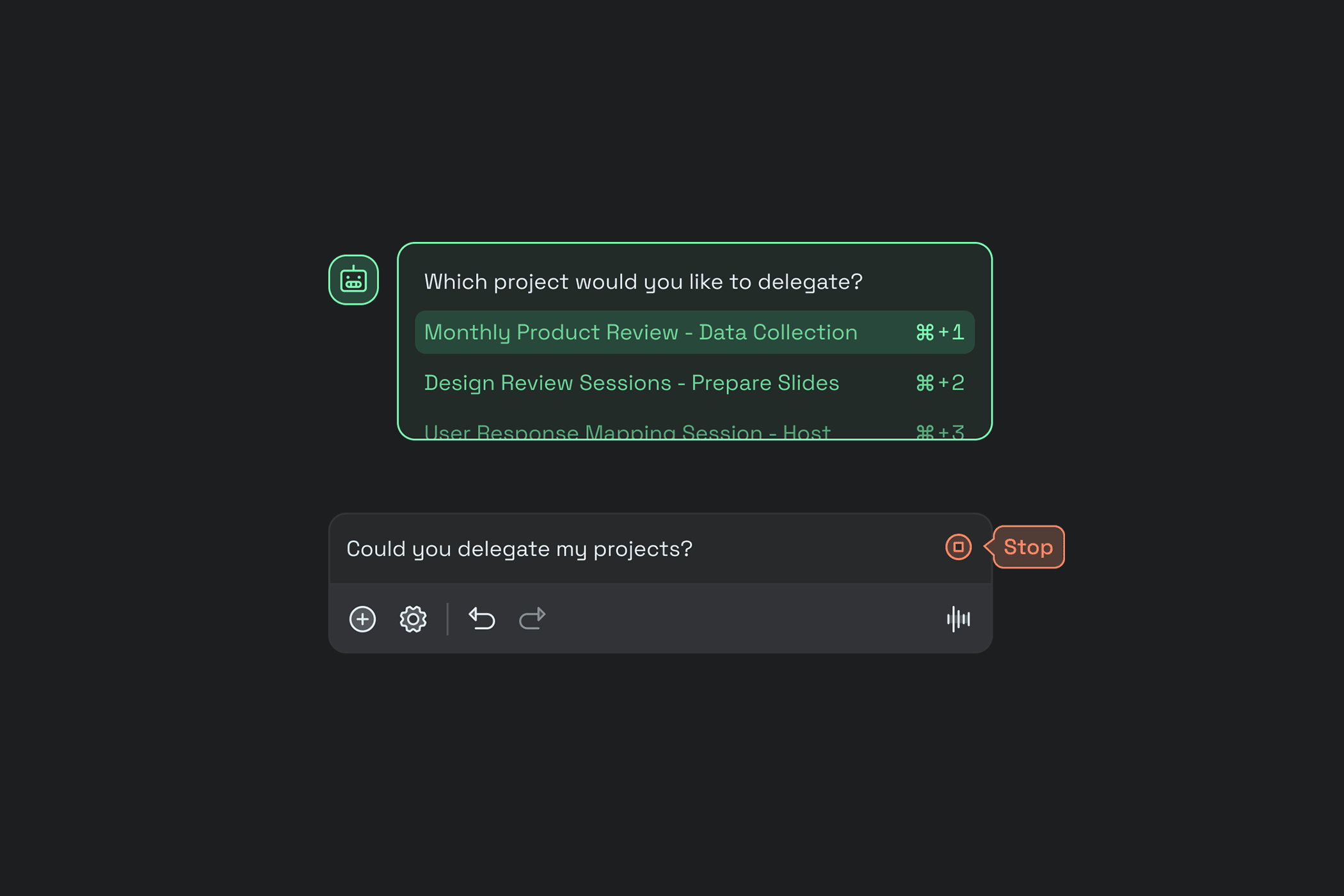

Introduce Persistent “Pause and Revert” Controls:

Designing an always-visible pause or “revert to before AI” button gives users psychological safety. These are not panic buttons. They are creative affordances that allow a human to reassert authorship when the AI moves too far ahead.
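Behind a "revert to before AI" button, the system needs a snapshot of the state before the agent touched it. A minimal sketch, assuming the work can be represented as a simple key-value document; `RevertableDocument` and its methods are hypothetical names used for illustration.

```python
import copy
from dataclasses import dataclass, field
from typing import Any


@dataclass
class RevertableDocument:
    """Hypothetical wrapper that checkpoints state before each agent edit."""
    content: dict[str, Any]
    paused: bool = False
    _checkpoints: list[dict[str, Any]] = field(default_factory=list, init=False, repr=False)

    def agent_edit(self, key: str, value: Any) -> bool:
        """Apply an agent edit unless the user has paused the agent."""
        if self.paused:
            return False
        self._checkpoints.append(copy.deepcopy(self.content))
        self.content[key] = value
        return True

    def pause(self) -> None:
        self.paused = True

    def resume(self) -> None:
        self.paused = False

    def undo_last_agent_edit(self) -> None:
        """Step back one agent edit."""
        if self._checkpoints:
            self.content = self._checkpoints.pop()

    def revert_to_before_ai(self) -> None:
        """Restore the state captured before the first agent edit.
        Note: this also discards any manual edits made after that point."""
        if self._checkpoints:
            self.content = self._checkpoints[0]
            self._checkpoints.clear()
```

Keeping both a single-step undo and a full revert lets users choose between fine-grained correction and reclaiming authorship entirely.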

Third Priority

What part of the user journey?

Reflection and Iteration Phase

Potential Strategy?

Embed Agent Transparency in the Interface:

Many users didn’t know why the agent did what it did. By making the AI’s rationale, rules, or assumptions visible within the UI (even if only in expandable summaries) we could actually reduce surprise. This also helps train user mental models to align with agent logic, especially over repeated use.
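To make the expandable-summary idea concrete, an agent could attach a small explanation payload to every action, which the UI renders collapsed by default and expands on request. This is a sketch under assumptions; `ActionExplanation` and its field names are hypothetical, not an existing schema.

```python
from dataclasses import dataclass, field


@dataclass
class ActionExplanation:
    """Hypothetical payload explaining why the agent took an action."""
    action: str
    rationale: str
    rules: list[str] = field(default_factory=list)
    assumptions: list[str] = field(default_factory=list)

    def summary(self, expanded: bool = False) -> str:
        """Collapsed: a single line with a 'why?' affordance.
        Expanded: the rationale, then each rule and assumption on its own line."""
        if not expanded:
            return f"{self.action} (why?)"
        lines = [self.action, f"Why: {self.rationale}"]
        lines += [f"Rule: {r}" for r in self.rules]
        lines += [f"Assumed: {a}" for a in self.assumptions]
        return "\n".join(lines)
```

Surfacing assumptions separately from rules gives users a specific place to spot where the agent's model of them went wrong.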

What’s the potential impact?

Best Case

What outcome are we hoping for?

Users gain a sense of co-creation instead of being overridden. Task trust improves, regret-based undo behavior drops by 50 percent, and users report higher satisfaction when working with autonomous agents.

Here's a potential OKR key result for this outcome:

Reduce agent-initiated user reversals by 50 percent while maintaining task completion time.
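The key result has two halves that should be checked together. A minimal sketch, assuming reversals are counted per agent-initiated action and "maintaining task completion time" means the median time grows by no more than a small tolerance; the 5 percent tolerance and the function names are hypothetical.

```python
from statistics import median


def reversal_rate(agent_actions: int, user_reversals: int) -> float:
    """Share of agent-initiated actions that the user undid or rebuilt."""
    if agent_actions <= 0:
        raise ValueError("agent_actions must be positive")
    return user_reversals / agent_actions


def key_result_met(baseline: tuple[int, int], current: tuple[int, int],
                   baseline_times: list[float], current_times: list[float],
                   time_tolerance: float = 0.05) -> bool:
    """True if the reversal rate fell by at least 50 percent and the median
    task time did not grow by more than `time_tolerance` (assumed 5%)."""
    base_rate = reversal_rate(*baseline)
    if base_rate == 0:
        raise ValueError("baseline has no reversals to reduce")
    reduction = 1 - reversal_rate(*current) / base_rate
    time_ok = median(current_times) <= median(baseline_times) * (1 + time_tolerance)
    return reduction >= 0.5 and time_ok
```

Tying the two conditions together guards against the worst case below, where fewer reversals come only from a slower, more passive agent.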

Worst Case

What outcomes should we try to avoid?

The agent becomes too passive, requiring manual confirmation for every small task. This slows workflows down and leads to a perception of inefficiency. Trust doesn’t improve, and now neither does speed.

Being Prepared

What should we be prepared for?

Test different thresholds for interruptibility. Not every action needs a pause, but actions that are irreversible or carry emotional weight definitely do. Build analytics around friction points and conversation-based regret signals to tune timing models over time.
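A threshold policy like this could start as a small decision function that later gets tuned with regret analytics. A sketch under assumptions: the three gate levels, the `ActionProfile` fields, and the 10 percent regret threshold are all hypothetical starting points, not measured values.

```python
from dataclasses import dataclass
from enum import Enum


class Gate(Enum):
    PROCEED = "proceed"     # low stakes: act immediately, keep undo available
    GRACE_WINDOW = "grace"  # preview, then auto-commit unless interrupted
    CONFIRM = "confirm"     # irreversible or emotionally weighted: ask first


@dataclass
class ActionProfile:
    """Hypothetical description of an action the agent is about to take."""
    reversible: bool
    emotional_weight: bool  # e.g. messages that reach other people
    regret_rate: float      # share of past runs followed by a regret signal


def choose_gate(profile: ActionProfile, regret_threshold: float = 0.10) -> Gate:
    """Pick how much interruptibility an action gets."""
    if not profile.reversible or profile.emotional_weight:
        return Gate.CONFIRM
    if profile.regret_rate >= regret_threshold:
        return Gate.GRACE_WINDOW
    return Gate.PROCEED
```

Only the middle tier depends on analytics, so tuning `regret_threshold` shifts routine actions between "just do it" and "preview first" without ever loosening the gate on irreversible ones.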

I'm currently open to full-time roles and freelance collaborations. If my work feels like a good fit for your team or project, feel free to reach out! Also, follow along on my social channels to see what I’m working on next.

Creating and collaborating locally in Crested Butte, Colorado.

Anthony Todd’s Accumulating Collection of Design Work
